Rajiv Shringi Vinay Chella Kaidan Fullerton Oleksii Tkachuk Joey Lynch

As Netflix continues to increase and diversify into varied sectors like Video on Demand and Gaming, the flexibility to ingest and retailer huge quantities of temporal knowledge — typically reaching petabytes — with millisecond entry latency has develop into more and more very important. In earlier weblog posts, we launched the Key-Worth Knowledge Abstraction Layer and the Knowledge Gateway Platform, each of that are integral to Netflix’s knowledge structure. The Key-Worth Abstraction affords a versatile, scalable resolution for storing and accessing structured key-value knowledge, whereas the Knowledge Gateway Platform gives important infrastructure for safeguarding, configuring, and deploying the information tier.

Constructing on these foundational abstractions, we developed the TimeSeries Abstraction — a flexible and scalable resolution designed to effectively retailer and question massive volumes of temporal occasion knowledge with low millisecond latencies, all in a cheap method throughout varied use circumstances.

On this put up, we are going to delve into the structure, design ideas, and real-world purposes of the TimeSeries Abstraction, demonstrating the way it enhances our platform’s skill to handle temporal knowledge at scale.

Observe: Opposite to what the identify might recommend, this method isn’t constructed as a general-purpose time collection database. We don’t use it for metrics, histograms, timers, or any such near-real time analytics use case. These use circumstances are nicely served by the Netflix Atlas telemetry system. As a substitute, we deal with addressing the problem of storing and accessing extraordinarily high-throughput, immutable temporal occasion knowledge in a low-latency and cost-efficient method.

At Netflix, temporal knowledge is repeatedly generated and utilized, whether or not from consumer interactions like video-play occasions, asset impressions, or advanced micro-service community actions. Successfully managing this knowledge at scale to extract invaluable insights is essential for guaranteeing optimum consumer experiences and system reliability.

Nonetheless, storing and querying such knowledge presents a novel set of challenges:

- Excessive Throughput: Managing as much as 10 million writes per second whereas sustaining excessive availability.

- Environment friendly Querying in Massive Datasets: Storing petabytes of information whereas guaranteeing main key reads return outcomes inside low double-digit milliseconds, and supporting searches and aggregations throughout a number of secondary attributes.

- International Reads and Writes: Facilitating learn and write operations from anyplace on the earth with adjustable consistency fashions.

- Tunable Configuration: Providing the flexibility to partition datasets in both a single-tenant or multi-tenant datastore, with choices to regulate varied dataset features equivalent to retention and consistency.

- Dealing with Bursty Visitors: Managing important visitors spikes throughout high-demand occasions, equivalent to new content material launches or regional failovers.

- Value Effectivity: Lowering the price per byte and per operation to optimize long-term retention whereas minimizing infrastructure bills, which might quantity to thousands and thousands of {dollars} for Netflix.

The TimeSeries Abstraction was developed to fulfill these necessities, constructed across the following core design ideas:

- Partitioned Knowledge: Knowledge is partitioned utilizing a novel temporal partitioning technique mixed with an occasion bucketing strategy to effectively handle bursty workloads and streamline queries.

- Versatile Storage: The service is designed to combine with varied storage backends, together with Apache Cassandra and Elasticsearch, permitting Netflix to customise storage options primarily based on particular use case necessities.

- Configurability: TimeSeries affords a variety of tunable choices for every dataset, offering the flexibleness wanted to accommodate a big selection of use circumstances.

- Scalability: The structure helps each horizontal and vertical scaling, enabling the system to deal with growing throughput and knowledge volumes as Netflix expands its consumer base and providers.

- Sharded Infrastructure: Leveraging the Knowledge Gateway Platform, we will deploy single-tenant and/or multi-tenant infrastructure with the mandatory entry and visitors isolation.

Let’s dive into the varied features of this abstraction.

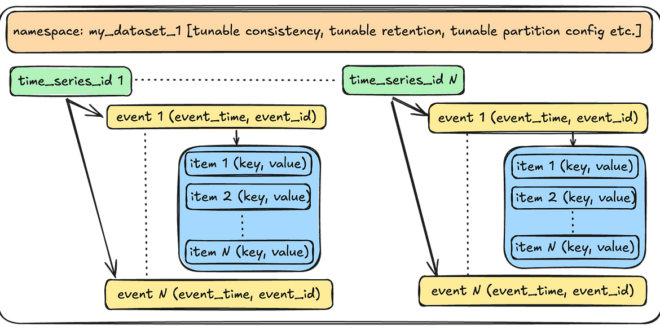

We comply with a novel occasion knowledge mannequin that encapsulates all the information we need to seize for occasions, whereas permitting us to question them effectively.

Let’s begin with the smallest unit of information within the abstraction and work our means up.

- Occasion Merchandise: An occasion merchandise is a key-value pair that customers use to retailer knowledge for a given occasion. For instance: {“device_type”: “ios”}.

- Occasion: An occasion is a structured assortment of a number of such occasion gadgets. An occasion happens at a particular cut-off date and is recognized by a client-generated timestamp and an occasion identifier (equivalent to a UUID). This mixture of event_time and event_id additionally varieties a part of the distinctive idempotency key for the occasion, enabling customers to securely retry requests.

- Time Collection ID: A time_series_id is a set of a number of such occasions over the dataset’s retention interval. For example, a device_id would retailer all occasions occurring for a given machine over the retention interval. All occasions are immutable, and the TimeSeries service solely ever appends occasions to a given time collection ID.

- Namespace: A namespace is a set of time collection IDs and occasion knowledge, representing the entire TimeSeries dataset. Customers can create a number of namespaces for every of their use circumstances. The abstraction applies varied tunable choices on the namespace degree, which we are going to focus on additional after we discover the service’s management airplane.

The abstraction gives the next APIs to work together with the occasion knowledge.

- WriteEventRecordsSync: This endpoint writes a batch of occasions and sends again a sturdiness acknowledgement to the shopper. That is utilized in circumstances the place customers require a assure of sturdiness.

- WriteEventRecords: That is the fire-and-forget model of the above endpoint. It enqueues a batch of occasions with out the sturdiness acknowledgement. That is utilized in circumstances like logging or tracing, the place customers care extra about throughput and may tolerate a small quantity of information loss.

{

"namespace": "my_dataset",

"occasions": [

{

"timeSeriesId": "profile100",

"eventTime": "2024-10-03T21:24:23.988Z",

"eventId": "550e8400-e29b-41d4-a716-446655440000",

"eventItems": [

{

"eventItemKey": "ZGV2aWNlVHlwZQ==",

"eventItemValue": "aW9z"

},

{

"eventItemKey": "ZGV2aWNlTWV0YWRhdGE=",

"eventItemValue": "c29tZSBtZXRhZGF0YQ=="

}

]

},

{

"timeSeriesId": "profile100",

"eventTime": "2024-10-03T21:23:30.000Z",

"eventId": "123e4567-e89b-12d3-a456-426614174000",

"eventItems": [

{

"eventItemKey": "ZGV2aWNlVHlwZQ==",

"eventItemValue": "YW5kcm9pZA=="

}

]

}

]

}

ReadEventRecords: Given a mixture of a namespace, a timeSeriesId, a timeInterval, and non-obligatory eventFilters, this endpoint returns all of the matching occasions, sorted descending by event_time, with low millisecond latency.

{

"namespace": "my_dataset",

"timeSeriesId": "profile100",

"timeInterval": {

"begin": "2024-10-02T21:00:00.000Z",

"finish": "2024-10-03T21:00:00.000Z"

},

"eventFilters": [

{

"matchEventItemKey": "ZGV2aWNlVHlwZQ==",

"matchEventItemValue": "aW9z"

}

],

"pageSize": 100,

"totalRecordLimit": 1000

}

SearchEventRecords: Given a search standards and a time interval, this endpoint returns all of the matching occasions. These use circumstances are high quality with ultimately constant reads.

{

"namespace": "my_dataset",

"timeInterval": {

"begin": "2024-10-02T21:00:00.000Z",

"finish": "2024-10-03T21:00:00.000Z"

},

"searchQuery": {

"booleanQuery": {

"searchQuery": [

{

"equals": {

"eventItemKey": "deviceType",

"eventItemValue": "aW9z"

}

},

{

"equals": {

"eventItemKey": "deviceType",

"eventItemValue": "YW5kcm9pZA=="

}

}

],

"operator": "OR"

}

},

"pageSize": 100,

"totalRecordLimit": 1000

}

AggregateEventRecords: Given a search standards and an aggregation mode (e.g. DistinctAggregation) , this endpoint performs the given aggregation inside a given time interval. Just like the Search endpoint, customers can tolerate eventual consistency and a doubtlessly greater latency (in seconds).

{

"namespace": "my_dataset",

"timeInterval": {

"begin": "2024-10-02T21:00:00.000Z",

"finish": "2024-10-03T21:00:00.000Z"

},

"searchQuery": {...some search standards...},

"aggregationQuery": {

"distinct": {

"eventItemKey": "deviceType",

"pageSize": 100

}

}

}

Within the subsequent sections, we are going to discuss how we work together with this knowledge on the storage layer.

The storage layer for TimeSeries includes a main knowledge retailer and an non-obligatory index knowledge retailer. The first knowledge retailer ensures knowledge sturdiness throughout writes and is used for main learn operations, whereas the index knowledge retailer is utilized for search and combination operations. At Netflix, Apache Cassandra is the popular selection for storing sturdy knowledge in high-throughput eventualities, whereas Elasticsearch is the popular knowledge retailer for indexing. Nonetheless, just like our strategy with the API, the storage layer isn’t tightly coupled to those particular knowledge shops. As a substitute, we outline storage API contracts that should be fulfilled, permitting us the flexibleness to exchange the underlying knowledge shops as wanted.

On this part, we are going to discuss how we leverage Apache Cassandra for TimeSeries use circumstances.

Partitioning Scheme

At Netflix’s scale, the continual inflow of occasion knowledge can shortly overwhelm conventional databases. Temporal partitioning addresses this problem by dividing the information into manageable chunks primarily based on time intervals, equivalent to hourly, day by day, or month-to-month home windows. This strategy allows environment friendly querying of particular time ranges with out the necessity to scan the whole dataset. It additionally permits Netflix to archive, compress, or delete older knowledge effectively, optimizing each storage and question efficiency. Moreover, this partitioning mitigates the efficiency points sometimes related to broad partitions in Cassandra. By using this technique, we will function at a lot greater disk utilization, because it reduces the necessity to reserve massive quantities of disk house for compactions, thereby saving prices.

Here’s what it seems like :

Time Slice: A time slice is the unit of information retention and maps on to a Cassandra desk. We create a number of such time slices, every overlaying a particular interval of time. An occasion lands in one in all these slices primarily based on the event_time. These slices are joined with no time gaps in between, with operations being start-inclusive and end-exclusive, guaranteeing that each one knowledge lands in one of many slices.

Why not use row-based Time-To-Stay (TTL)?

Utilizing TTL on particular person occasions would generate a major variety of tombstones in Cassandra, degrading efficiency, particularly throughout vary scans. By using discrete time slices and dropping them, we keep away from the tombstone situation completely. The tradeoff is that knowledge could also be retained barely longer than obligatory, as a whole desk’s time vary should fall outdoors the retention window earlier than it may be dropped. Moreover, TTLs are tough to regulate later, whereas TimeSeries can lengthen the dataset retention immediately with a single management airplane operation.

Time Buckets: Inside a time slice, knowledge is additional partitioned into time buckets. This facilitates efficient vary scans by permitting us to focus on particular time buckets for a given question vary. The tradeoff is that if a consumer desires to learn the whole vary of information over a big time interval, we should scan many partitions. We mitigate potential latency by scanning these partitions in parallel and aggregating the information on the finish. Usually, the benefit of concentrating on smaller knowledge subsets outweighs the learn amplification from these scatter-gather operations. Sometimes, customers learn a smaller subset of information moderately than the whole retention vary.

Occasion Buckets: To handle extraordinarily high-throughput write operations, which can end in a burst of writes for a given time collection inside a brief interval, we additional divide the time bucket into occasion buckets. This prevents overloading the identical partition for a given time vary and likewise reduces partition sizes additional, albeit with a slight improve in learn amplification.

Observe: With Cassandra 4.x onwards, we discover a considerable enchancment within the efficiency of scanning a variety of information in a large partition. See Future Enhancements on the finish to see the Dynamic Occasion bucketing work that goals to reap the benefits of this.

Storage Tables

We use two sorts of tables

- Knowledge tables: These are the time slices that retailer the precise occasion knowledge.

- Metadata desk: This desk shops details about how every time slice is configured per namespace.

Knowledge tables

The partition key allows splitting occasions for a time_series_id over a variety of time_bucket(s) and event_bucket(s), thus mitigating sizzling partitions, whereas the clustering key permits us to maintain knowledge sorted on disk within the order we nearly all the time need to learn it. The value_metadata column shops metadata for the event_item_value equivalent to compression.

Writing to the information desk:

Person writes will land in a given time slice, time bucket, and occasion bucket as an element of the event_time hooked up to the occasion. This issue is dictated by the management airplane configuration of a given namespace.

For instance:

Studying from the information desk:

The under illustration depicts at a high-level on how we scatter-gather the reads from a number of partitions and be part of the consequence set on the finish to return the ultimate consequence.

Metadata desk

This desk shops the configuration knowledge in regards to the time slices for a given namespace.

Observe the next:

- No Time Gaps: The end_time of a given time slice overlaps with the start_time of the following time slice, guaranteeing all occasions discover a dwelling.

- Retention: The standing signifies which tables fall inside and out of doors of the retention window.

- Versatile: This metadata could be adjusted per time slice, permitting us to tune the partition settings of future time slices primarily based on noticed knowledge patterns within the present time slice.

There may be much more data that may be saved into the metadata column (e.g., compaction settings for the desk), however we solely present the partition settings right here for brevity.

To help secondary entry patterns by way of non-primary key attributes, we index knowledge into Elasticsearch. Customers can configure an inventory of attributes per namespace that they want to search and/or combination knowledge on. The service extracts these fields from occasions as they stream in, indexing the resultant paperwork into Elasticsearch. Relying on the throughput, we might use Elasticsearch as a reverse index, retrieving the complete knowledge from Cassandra, or we might retailer the whole supply knowledge instantly in Elasticsearch.

Observe: Once more, customers are by no means instantly uncovered to Elasticsearch, similar to they don’t seem to be instantly uncovered to Cassandra. As a substitute, they work together with the Search and Combination API endpoints that translate a given question to that wanted for the underlying datastore.

Within the subsequent part, we are going to discuss how we configure these knowledge shops for various datasets.

The information airplane is accountable for executing the learn and write operations, whereas the management airplane configures each side of a namespace’s habits. The information airplane communicates with the TimeSeries management stack, which manages this configuration data. In flip, the TimeSeries management stack interacts with a sharded Knowledge Gateway Platform Management Airplane that oversees management configurations for all abstractions and namespaces.

Separating the obligations of the information airplane and management airplane helps preserve the excessive availability of our knowledge airplane, because the management airplane takes on duties which will require some type of schema consensus from the underlying knowledge shops.

The under configuration snippet demonstrates the immense flexibility of the service and the way we will tune a number of issues per namespace utilizing our management airplane.

"persistence_configuration": [

{

"id": "PRIMARY_STORAGE",

"physical_storage": {

"type": "CASSANDRA", // type of primary storage

"cluster": "cass_dgw_ts_tracing", // physical cluster name

"dataset": "tracing_default" // maps to the keyspace

},

"config": {

"timePartition": {

"secondsPerTimeSlice": "129600", // width of a time slice

"secondPerTimeBucket": "3600", // width of a time bucket

"eventBuckets": 4 // how many event buckets within

},

"queueBuffering": {

"coalesce": "1s", // how long to coalesce writes

"bufferCapacity": 4194304 // queue capacity in bytes

},

"consistencyScope": "LOCAL", // single-region/multi-region

"consistencyTarget": "EVENTUAL", // read/write consistency

"acceptLimit": "129600s" // how far back writes are allowed

},

"lifecycleConfigs": {

"lifecycleConfig": [ // Primary store data retention

{

"type": "retention",

"config": {

"close_after": "1296000s", // close for reads/writes

"delete_after": "1382400s" // drop time slice

}

}

]

}

},

{

"id": "INDEX_STORAGE",

"physicalStorage": {

"kind": "ELASTICSEARCH", // kind of index storage

"cluster": "es_dgw_ts_tracing", // ES cluster identify

"dataset": "tracing_default_useast1" // base index identify

},

"config": {

"timePartition": {

"secondsPerSlice": "129600" // width of the index slice

},

"consistencyScope": "LOCAL",

"consistencyTarget": "EVENTUAL", // how ought to we learn/write knowledge

"acceptLimit": "129600s", // how far again writes are allowed

"indexConfig": {

"fieldMapping": { // fields to extract to index

"tags.nf.app": "KEYWORD",

"tags.length": "INTEGER",

"tags.enabled": "BOOLEAN"

},

"refreshInterval": "60s" // Index associated settings

}

},

"lifecycleConfigs": {

"lifecycleConfig": [

{

"type": "retention", // Index retention settings

"config": {

"close_after": "1296000s",

"delete_after": "1382400s"

}

}

]

}

}

]

With so many various parameters, we want automated provisioning workflows to infer one of the best settings for a given workload. When customers need to create their namespaces, they specify an inventory of workload needs, which the automation interprets into concrete infrastructure and associated management airplane configuration. We extremely encourage you to observe this ApacheCon speak, by one in all our beautiful colleagues Joey Lynch, on how we obtain this. We might go into element on this topic in one in all our future weblog posts.

As soon as the system provisions the preliminary infrastructure, it then scales in response to the consumer workload. The following part describes how that is achieved.

Our customers might function with restricted data on the time of provisioning their namespaces, leading to best-effort provisioning estimates. Additional, evolving use-cases might introduce new throughput necessities over time. Right here’s how we handle this:

- Horizontal scaling: TimeSeries server situations can auto-scale up and down as per hooked up scaling insurance policies to fulfill the visitors demand. The storage server capability could be recomputed to accommodate altering necessities utilizing our capability planner.

- Vertical scaling: We can also select to vertically scale our TimeSeries server situations or our storage situations to get higher CPU, RAM and/or hooked up storage capability.

- Scaling disk: We might connect EBS to retailer knowledge if the capability planner prefers infrastructure that gives bigger storage at a decrease price moderately than SSDs optimized for latency. In such circumstances, we deploy jobs to scale the EBS quantity when the disk storage reaches a sure share threshold.

- Re-partitioning knowledge: Inaccurate workload estimates can result in over or under-partitioning of our datasets. TimeSeries control-plane can modify the partitioning configuration for upcoming time slices, as soon as we notice the character of information within the wild (by way of partition histograms). Sooner or later we plan to help re-partitioning of older knowledge and dynamic partitioning of present knowledge.

Up to now, now we have seen how TimeSeries shops, configures and interacts with occasion datasets. Let’s see how we apply totally different strategies to enhance the efficiency of our operations and supply higher ensures.

We choose to bake in idempotency in all mutation endpoints, in order that customers can retry or hedge their requests safely. Hedging is when the shopper sends an equivalent competing request to the server, if the unique request doesn’t come again with a response in an anticipated period of time. The shopper then responds with whichever request completes first. That is performed to maintain the tail latencies for an software comparatively low. This may solely be performed safely if the mutations are idempotent. For TimeSeries, the mixture of event_time, event_id and event_item_key kind the idempotency key for a given time_series_id occasion.

We assign Service Stage Targets (SLO) targets for various endpoints inside TimeSeries, as a sign of what we predict the efficiency of these endpoints ought to be for a given namespace. We are able to then hedge a request if the response doesn’t come again in that configured period of time.

"slos": {

"learn": { // SLOs per endpoint

"latency": {

"goal": "0.5s", // hedge round this quantity

"max": "1s" // time-out round this quantity

}

},

"write": {

"latency": {

"goal": "0.01s",

"max": "0.05s"

}

}

}

Generally, a shopper could also be delicate to latency and prepared to simply accept a partial consequence set. An actual-world instance of that is real-time frequency capping. Precision isn’t crucial on this case, but when the response is delayed, it turns into virtually ineffective to the upstream shopper. Subsequently, the shopper prefers to work with no matter knowledge has been collected thus far moderately than timing out whereas ready for all the information. The TimeSeries shopper helps partial returns round SLOs for this function. Importantly, we nonetheless preserve the newest order of occasions on this partial fetch.

All reads begin with a default fanout issue, scanning 8 partition buckets in parallel. Nonetheless, if the service layer determines that the time_series dataset is dense — i.e., most reads are glad by studying the primary few partition buckets — then it dynamically adjusts the fanout issue of future reads so as to cut back the learn amplification on the underlying datastore. Conversely, if the dataset is sparse, we might need to improve this restrict with an inexpensive higher certain.

Usually, the lively vary for writing knowledge is smaller than the vary for studying knowledge — i.e., we wish a variety of time to develop into immutable as quickly as attainable in order that we will apply optimizations on prime of it. We management this by having a configurable “acceptLimit” parameter that forestalls customers from writing occasions older than this time restrict. For instance, an settle for restrict of 4 hours implies that customers can not write occasions older than now() — 4 hours. We generally elevate this restrict for backfilling historic knowledge, however it’s tuned again down for normal write operations. As soon as a variety of information turns into immutable, we will safely do issues like caching, compressing, and compacting it for reads.

We regularly leverage this service for dealing with bursty workloads. Quite than overwhelming the underlying datastore with this load unexpectedly, we intention to distribute it extra evenly by permitting occasions to coalesce over quick durations (sometimes seconds). These occasions accumulate in in-memory queues operating on every occasion. Devoted customers then steadily drain these queues, grouping the occasions by their partition key, and batching the writes to the underlying datastore.

The queues are tailor-made to every datastore since their operational traits rely on the particular datastore being written to. For example, the batch dimension for writing to Cassandra is considerably smaller than that for indexing into Elasticsearch, resulting in totally different drain charges and batch sizes for the related customers.

Whereas utilizing in-memory queues does improve JVM rubbish assortment, now we have skilled substantial enhancements by transitioning to JDK 21 with ZGC. For example the influence, ZGC has lowered our tail latencies by a powerful 86%:

As a result of we use in-memory queues, we’re susceptible to dropping occasions in case of an occasion crash. As such, these queues are solely used to be used circumstances that may tolerate some quantity of information loss .e.g. tracing/logging. To be used circumstances that want assured sturdiness and/or read-after-write consistency, these queues are successfully disabled and writes are flushed to the information retailer nearly instantly.

As soon as a time slice exits the lively write window, we will leverage the immutability of the information to optimize it for learn efficiency. This course of might contain re-compacting immutable knowledge utilizing optimum compaction methods, dynamically shrinking and/or splitting shards to optimize system sources, and different comparable strategies to make sure quick and dependable efficiency.

The next part gives a glimpse into the real-world efficiency of a few of our TimeSeries datasets.

The service can write knowledge within the order of low single digit milliseconds

whereas persistently sustaining secure point-read latencies:

On the time of penning this weblog, the service was processing near 15 million occasions/second throughout all of the totally different datasets at peak globally.

The TimeSeries Abstraction performs an important function throughout key providers at Netflix. Listed here are some impactful use circumstances:

- Tracing and Insights: Logs traces throughout all apps and micro-services inside Netflix, to grasp service-to-service communication, assist in debugging of points, and reply help requests.

- Person Interplay Monitoring: Tracks thousands and thousands of consumer interactions — equivalent to video playbacks, searches, and content material engagement — offering insights that improve Netflix’s suggestion algorithms in real-time and enhance the general consumer expertise.

- Function Rollout and Efficiency Evaluation: Tracks the rollout and efficiency of recent product options, enabling Netflix engineers to measure how customers have interaction with options, which powers data-driven selections about future enhancements.

- Asset Impression Monitoring and Optimization: Tracks asset impressions guaranteeing content material and belongings are delivered effectively whereas offering real-time suggestions for optimizations.

- Billing and Subscription Administration: Shops historic knowledge associated to billing and subscription administration, guaranteeing accuracy in transaction data and supporting customer support inquiries.

and extra…

Because the use circumstances evolve, and the necessity to make the abstraction even less expensive grows, we intention to make many enhancements to the service within the upcoming months. A few of them are:

- Tiered Storage for Value Effectivity: Help shifting older, lesser-accessed knowledge into cheaper object storage that has greater time to first byte, doubtlessly saving Netflix thousands and thousands of {dollars}.

- Dynamic Occasion Bucketing: Help real-time partitioning of keys into optimally-sized partitions as occasions stream in, moderately than having a considerably static configuration on the time of provisioning a namespace. This technique has an enormous benefit of not partitioning time_series_ids that don’t want it, thus saving the general price of learn amplification. Additionally, with Cassandra 4.x, now we have famous main enhancements in studying a subset of information in a large partition that might lead us to be much less aggressive with partitioning the whole dataset forward of time.

- Caching: Make the most of immutability of information and cache it intelligently for discrete time ranges.

- Rely and different Aggregations: Some customers are solely excited by counting occasions in a given time interval moderately than fetching all of the occasion knowledge for it.

The TimeSeries Abstraction is a crucial element of Netflix’s on-line knowledge infrastructure, taking part in an important function in supporting each real-time and long-term decision-making. Whether or not it’s monitoring system efficiency throughout high-traffic occasions or optimizing consumer engagement via habits analytics, TimeSeries Abstraction ensures that Netflix operates seamlessly and effectively on a worldwide scale.

As Netflix continues to innovate and increase into new verticals, the TimeSeries Abstraction will stay a cornerstone of our platform, serving to us push the boundaries of what’s attainable in streaming and past.

Keep tuned for Half 2, the place we’ll introduce our Distributed Counter Abstraction, a key component of Netflix’s Composite Abstractions, constructed on prime of the TimeSeries Abstraction.

Particular because of our beautiful colleagues who contributed to TimeSeries Abstraction’s success: Tom DeVoe Mengqing Wang, Kartik Sathyanarayanan, Jordan West, Matt Lehman, Cheng Wang, Chris Lohfink .

Source link